INC 2023 L: Numbers Combination – An Analysis

This text was originally written as an Assurance of Learning assignment for the Algorithm Design and Analysis class in BINUS University. I am glad I was able to share it here!

- Abridged Problem Statement

- About the Encounter with Problem L

- Prerequisites

- Restating the Problem

- The Logarithm Trick

- Newton’s Method

- Computing Functions of Polynomials

- Algorithms and Analysis

- Wrapping It All Up

Abridged Problem Statement

The problem can be found and submitted to here. In fact, the original statement is already quite abridged.

About the Encounter with Problem L

Near the beginning of the contest – after only AC-ing three or four problems – bonk told me to read problem L. It looked like a “classic combinatorics problem”, she said.

I read the problem and quickly reduced it to finding the polynomial \((x) (x + x^2) \dots (x + x^2 + \dots + x^N)\). However, I didn’t know where to go from there.

I tried computing several small values via DP by hand, and plugged them into the OEIS. I found out that these numbers were, in fact, Mahonian numbers. After a quick search on my favorite search engine, I skimmed through several articles, but found nothing about computing a particular Mahonian number in subquadratic time.

I decided to put problem L on the back burner for now.

Fast-forward three or four hours. It was minute 278, and I had just solved problem D. bonk and trainerherp were still working on problem I. Thinking that no one had AC-ed L yet, I decided to help them both in I. Unsurprisingly, though, we did not manage to AC it.

In the end, I didn’t even get to investigate whether or not L is actually a “classic” combinatorics problem. After a little bit of after-contest discussion, I quickly realized that the polynomial coefficients I was struggling with can be found via DP. The polynomial would then be multiplied by the inverse of another polynomial, and the answer is one of the coefficients.

In this document, we will discuss an alternate approach to problem L which does not use DP.

Prerequisites

The reader is expected to know how to multiply two polynomials under a prime number modulus using number-theoretic transform (NTT).

The reader is also expected to understand Taylor series, especially the Maclaurin series of a function.

Restating the Problem

Let us see what we need to do in the problem.

- The \(i\)-th element of the sequence may take any value from \(1\) to \(i\), inclusive. In other words, there is exactly \(1\) way to make the value \(x\) where \(1 \le x \le i\).

- We want the sum of all elements to be \(K\).

We can naturally write it in its generating function form, similar to the knapsack counting problem. Let \(F_i(x) = (x + x^2 + \dots + x^i)\) be the generating function of the \(i\)-th element. Our answer is

\[\left[ x^K \right] (F_1(x) \cdot F_2(x) \cdot \dots \cdot F_N(x)).\]Let us “tidy” the forms of \(F_i(x)\). Note that \(F_i(x)\) is a geometric series of \(i\) elements whose first element and ratio are both \(x\). Therefore,

\[F_i(x) = \frac{x (1 - x^i)}{(1 - x)},\]which enables us to write the answer as

\[\left[ x^K \right] \left( \prod_{i = 1}^{N} F_i(x) \right) = \left[ x^K \right] \left( \frac{x^N}{(1 - x)^N} \prod_{i = 1}^{N} (1 - x^i) \right).\]Because our generating function has \(x^N\) as a factor, we can shift the coefficient we are looking for, \(x^K\), by \(N\), making the answer

\[\left[ x^{K - N} \right] \left( \frac{\prod_{i = 1}^{N} (1 - x^i)}{(1 - x)^N} \right).\]Of course, calculating \(\prod_{i = 1}^{N} (1 - x^i)\) naively by FFT will take \(\Theta(N^2 \log N)\) time, as the maximum degree is \(N\). We would also need a way to divide the resulting polynomial by \((1 - x)^N\).

However, we do not need to actually do all that directly!

The Logarithm Trick

What do we do when we have lots of products, and the products are not “nice” to calculate?

Remember that \(\log (ab) = \log a + \log b\), i.e. taking the logarithm allows us to transform the products into summations. It is relatively easier to sum polynomials than to multiply them, so we will follow this approach. To simplify things, we will take the natural logarithm, \(\ln\), of the polynomials.

Define \(P(x)\) as the generating function we need, \(\prod_{i = 1}^{N} (1 - x^i) / (1 - x)^N\). Taking the natural logarithm, we have

\[\begin{aligned} \ln P(x) &= \ln \frac{\prod_{i = 1}^{N} (1 - x^i)}{(1 - x)^N} \\ &= \sum_{i = 1}^{N} \left( \ln (1 - x^i) \right) - \ln (1 - x)^N \\ &= \sum_{i = 1}^{N} \left( \ln (1 - x^i) \right) - N \ln (1 - x) \end{aligned}\]as it holds that \(\ln a^k = k \ln a\).

It may not “make sense” what the logarithm of a polynomial is. However, note that we only need the first \(K - N\) terms of the “logarithm of the polynomial” when considered as a polynomial, as we are working with formal power series – we only care about the manipulation of the series and not its convergence. Thus, we can consider its Maclaurin series, specifically its first \(K - N\) terms.

The Maclaurin series for \(\ln (1 - x)\) is

\[\ln (1 - x) = -x - \frac{x^2}{2} - \frac{x^3}{3} - \dots = - \sum_{i \ge 1} \frac{x^i}{i},\]therefore

\[\ln P(x) = - \sum_{i = 1}^{N} \sum_{j \ge 1} \left( \frac{x^{ij}}{j} \right) + N \sum_{j \ge 1} \frac{x^j}{j}.\]At first glance, it seems like computing this polynomial takes \(\Theta(N^2)\) time because of the nested sum. However, it is actually faster, as the inner sum only goes from \(j = 1\) to \(\lfloor (K - N) / i \rfloor\) (remember, we only need the first \((K - N)\) terms of this polynomial). Therefore, the polynomial \(\ln P(x)\) is able to be calculated in \(\Theta((K - N) \ln (K - N))\) time as a result of the harmonic series.

Finally, to restore \(P(x)\), we can calculate \(\exp(\ln P(x))\), and to get the answer, we simply extract its \((K - N)\)-th coefficient.

All is clear, except for one deceptively simple step – how do we calculate the exponential of a polynomial?

Newton’s Method

Let us consider how to solve the equation \(F(P(x)) = 0\) for \(P\) where \(F\) and \(P\) are both polynomials. Say we know the value of \(F(x_0)\) and \(F'(x_0)\) for a particular \(x_0\). How do we approximate \(F(x)\)?

Consider the Taylor series of \(F(x)\) at \(x = x_0\):

\[F(x) = F(x_0) + (x - x_0) F'(x_0) + (x - x_0)^2 \frac{F''(x_0)}{2} + \dots\]By taking the first two terms, we are able to get a rough approximation of the function: \(F(x) \approx F(x_0) + (x - x_0) F'(x_0)\). However, notice that the terms of order \(x^2\) and above are still unequal after the approximation. Therefore, we can add a balancing term \((x - x_0)^2 G(x, x_0)\), whose value is chosen such that

\[F(x) = F(x_0) + (x - x_0) F'(x_0) + (x - x_0)^2 G(x, x_0).\]Say that we have \(P(x) = P_0(x)\) as the solution to \(F(P(x)) \equiv 0 \ (\mathrm{mod} \ x^a)\). We would like to “lift” this solution into another solution \(P_1(x)\) such that \(P_1(x) = P_0(x) + x^a Q(x)\), where \(Q(x)\) is another polynomial, and \(F(P_1(x)) \equiv 0 \ (\mathrm{mod} \ x^{2a})\).

If we set \(x_0\) as \(P_0(x)\) and \(x\) as \(P_1(x)\) in the equation above, we get

\[F(P_1(x)) \equiv F(P_0(x)) + (P_1(x) - P_0(x)) F'(P_0(x)) + (P_1(x) - P_0(x))^2 G(P_0(x), P_1(x)) \ (\mathrm{mod} \ x^{2a}).\]\(P_1(x) = P_0(x) + x^a Q(x)\) implies that \(P_1(x) - P_0(x) \equiv 0 \ (\mathrm{mod} \ x^a)\), which means that \((P_1(x) - P_0(x))^2 \equiv 0 \ (\mathrm{mod} \ x^{2a})\). Therefore, the equation above can be rewritten as

\[F(P_1(x)) \equiv F(P_0(x)) + (P_1(x) - P_0(x)) F'(P_0(x)) \ (\mathrm{mod} \ x^{2a}).\]Because \(F(P_1(x)) \equiv 0 \ (\mathrm{mod} \ x^{2a})\), we can solve for \(P_1(x)\) in the equation above, yielding

\[P_1(x) \equiv P_0(x) - \frac{F(P_0(x))}{F'(P_0(x))} \ (\mathrm{mod} \ x^{2a}).\]This result is known as Newton’s method. Using this, we can compute the first \(2^k\) coefficients of the solution for \(F(P(x)) = 0\) in \(k\) iterations, with the appropriate base case (involving the constant term).

Let us utilize this result to compute \(\exp(P(x))\).

Computing Functions of Polynomials

\(\exp(P(x))\)

We can directly use Newton’s method. We would like to find a solution \(Q(x)\) to \(\exp(P(x)) = Q(x)\), i.e. \(P(x) - \ln Q(x) = 0\). Therefore,

\[\begin{aligned} F(Q(x)) &= P(x) - \ln Q(x), \text{ and} \\ F'(Q(x)) &= - \frac{1}{Q(x)}. \end{aligned}\]Our recurrence relation is then

\[\begin{aligned} Q_k(x) &= Q_{k-1}(x) - \frac{P(x) - \ln Q_{k-1}(x)}{-1 / Q_{k-1}(x)} \\ &= Q_{k-1}(x) (P(x) - \ln Q_{k-1}(x) + 1). \end{aligned}\]Another problem arises: How do we compute \(\ln P(x)\) for an arbitrary polynomial \(P\)? Our Maclaurin series approach does not work here, as we would need to calculate polynomial powers, which would be inefficient.

\(\ln P(x)\)

There is a simpler way to calculate \(\ln P(x)\). We know that

\[\frac{\mathrm{d}}{\mathrm{d}x} \ln P(x) = \frac{P'(x)}{P(x)},\]which means that

\[\ln P(x) = \int \frac{P'(x)}{P(x)} \mathrm{d}x.\]Integrating a polynomial is quite straightforward and can be done in linear time.

A final problem that we need to solve would be finding the inverse of a polynomial.

\(1 / P(x)\)

We can again use Newton’s method. We would like to find a solution \(Q(x)\) to \(1 / P(x) = Q(x)\), i.e. \(P(x) - 1 / Q(x) = 0\). Therefore,

\[\begin{aligned} F(Q(x)) &= P(x) - \frac{1}{Q(x)}, \text{ and} \\ F'(Q(x)) &= \frac{1}{(Q(x))^2}. \end{aligned}\]Our recurrence relation is then

\[\begin{aligned} Q_k(x) &= Q_{k-1}(x) - \frac{P(x) - 1 / Q_{k-1}(x)}{1 / (Q_{k-1}(x))^2} \\ &= Q_{k-1}(x) (2 - P(x) Q_{k-1}(x)). \end{aligned}\]With these three recurrence relations, we are finally ready to compute \(\exp(P(x))\).

Algorithms and Analysis

In this section, a sketch of the algorithms used to compute the final answer will be presented. We will assume that all operations on the coefficients are done modulo \(998\,244\,353\) as stated in the problem. We also assume that the reader has implemented the function \({\rm M{\small ULTIPLY}}(A, B)\), which multiplies two polynomials \(A\) and \(B\) of degree \(D\) in \(\Theta(D \log D)\) time via NTT.

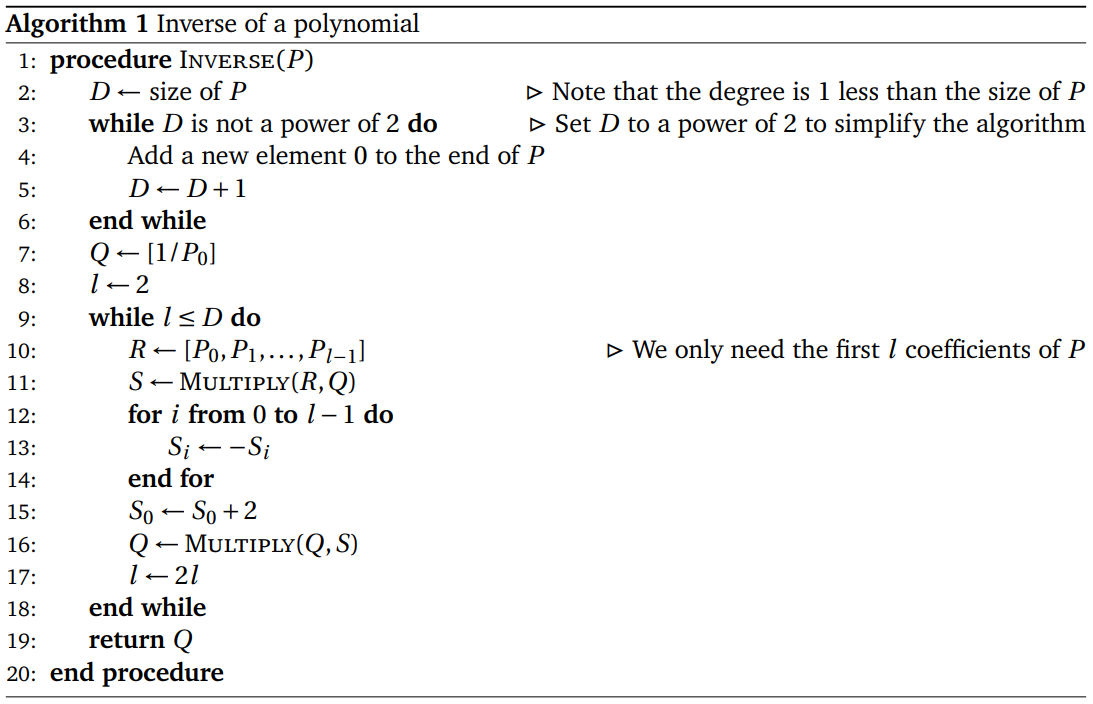

We will start with computing \(1 / P(x)\). In all algorithms below, the polynomials are represented as dynamic-length arrays.

For a polynomial of degree \(D\), the time complexity of \({\rm I{\small NVERSE}}(P)\) is \(\sum_{i = 1}^{\log D} \Theta \left( 2^i \log (2^i) \right)\). This is equivalent to solving the recurrence

\[T(D) = T \left( \frac{D}{2} \right) + \Theta(D \log D),\]whose solution is \(T(D) = \Theta(D \log D)\).

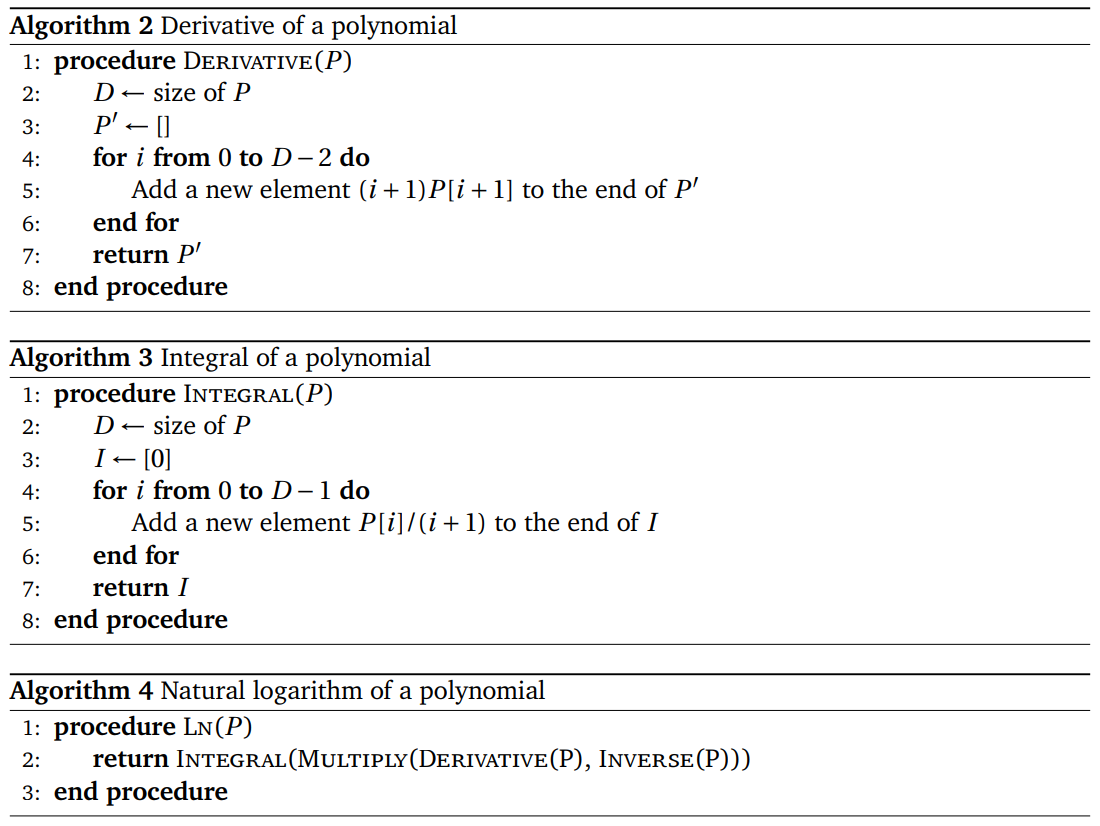

The next step is computing \(\ln P(x)\), which requires computing the derivative and integral of a polynomial.

It is easy to see that \({\rm D{\small ERIVATIVE}}(P)\) and \({\rm I{\small NTEGRAL}}(P)\) both run in \(\Theta(D)\) time. Therefore, \({\rm L{\small N}}(P)\) runs in \(\Theta(D \log D)\) time due to the \({\rm M{\small ULTIPLY}}\) and \({\rm I{\small NVERSE}}\) calls.

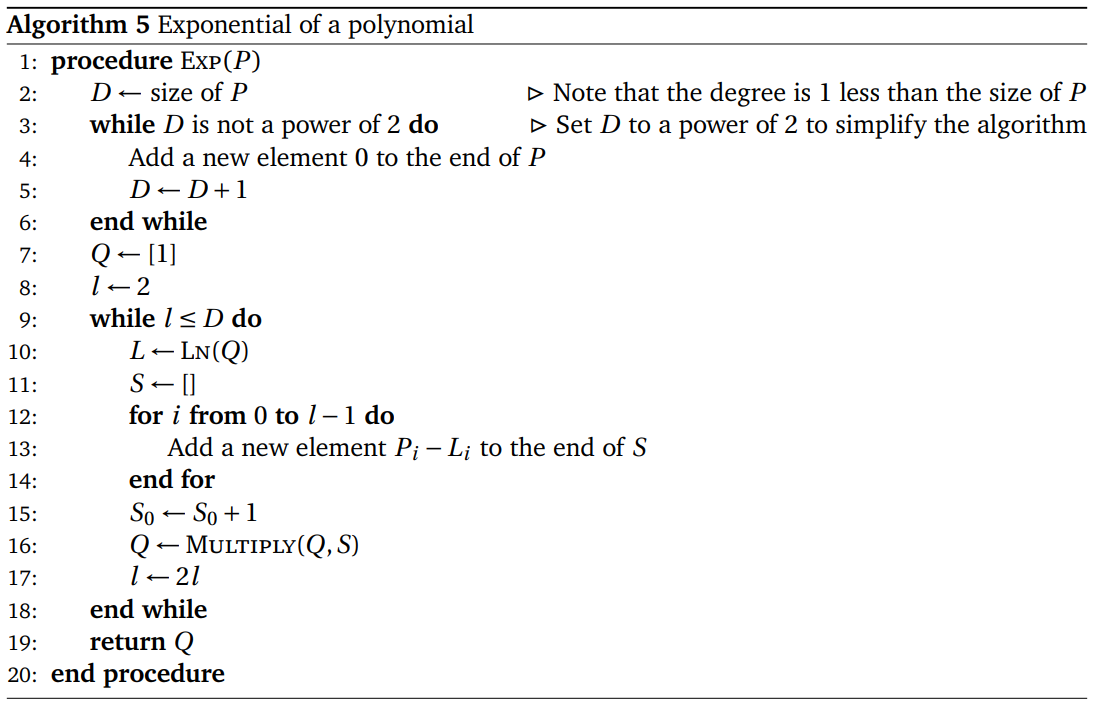

We are now ready to compute \(\exp(P)\).

Similar to \({\rm I{\small NVERSE}}(P)\), the time complexity of \({\rm E{\small XP}}(P)\) is \(\sum_{i = 1}^{\log D} \Theta \left( 2^i \log (2^i) \right)\) = \(\Theta(D \log D)\).

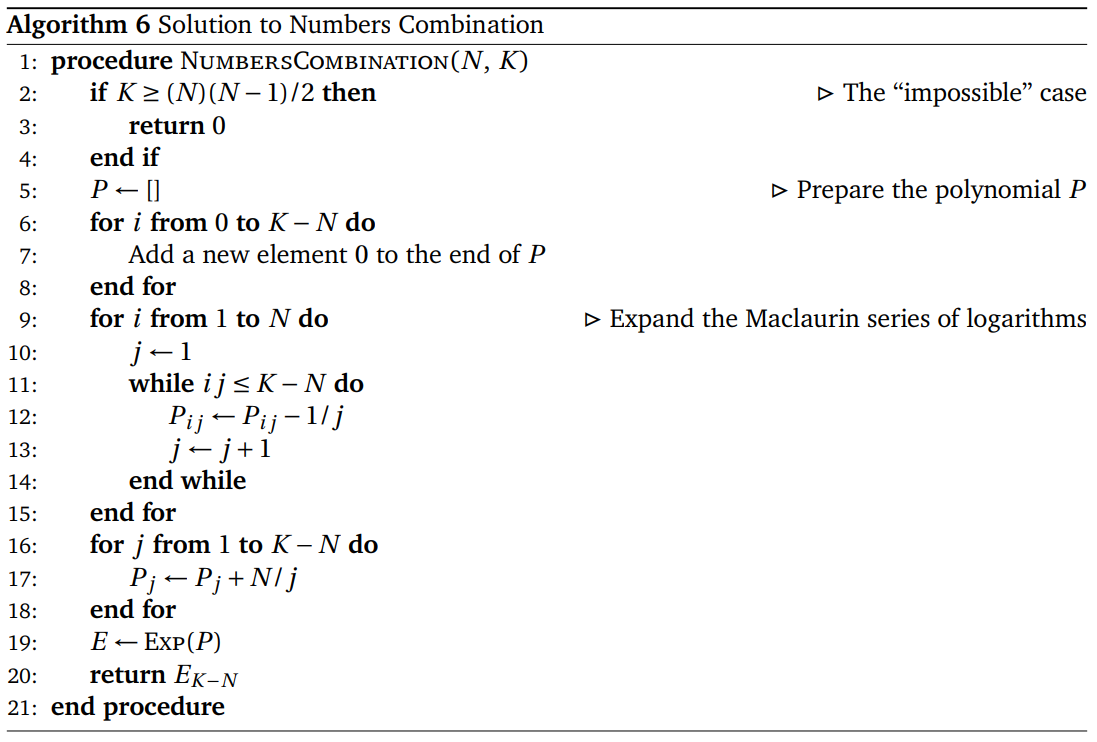

Finally, we are ready to find the final answer.

As discussed above, this algorithm runs in \(\Theta((K - N) \log (K - N))\) time due to the presence of the harmonic series in the while loop as well as the \({\rm E{\small XP}}\) call.

Therefore, the problem has been solved in \(\Theta((K - N) \log (K - N))\) time.

A submission to the problem in C++ can be found here for reference.

Wrapping It All Up

It is worth noting that the functions of polynomials discussed in this text – \(\exp(P(x))\), \(\ln P(x)\), and \(1 / P(x)\) – are commonly found as “black-box” algorithms in ICPC team notebooks. Of course, this essentially eliminates a large amount of discussion in this text during the process of solving the problem. Due to that reason as well, some people might not consider this problem a “novel” problem in the field of competitive programming, among a large number of other generating function problems.

Despite all of the above, analyzing this problem has familiarized me to the logarithm trick and Newton’s method. I do hope that the readers of this text, especially who have not frequently encountered generating function problems, are able to gain some insight or interest in the techniques commonly used in said problems.